Scrape Restaurants Data | Deliveroo | Talabat | Careem

Introduction

Scraping restaurant data from platforms like Deliveroo, Talabat, and Careem is an effective way to gain insight into the ever-evolving culinary landscape. It gives businesses and enthusiasts alike a detailed view of trending cuisines, popular dishes, and competitive pricing.

By extracting details like restaurant names, cuisines, locations, ratings, and customer reviews, scraping enables stakeholders to stay informed about consumer preferences and industry trends. This data can guide strategic decisions, from menu planning to pricing strategies, fostering a competitive edge in the market.

Web scraping also empowers businesses to monitor their competitors, identify emerging culinary trends, and adapt to evolving consumer preferences. For enthusiasts, it opens a window into diverse culinary experiences and helps discover hidden gems within the vast array of options.

Why Scrape Restaurants Data from Deliveroo, Talabat and Careem?

Collecting food delivery data from Deliveroo, Talabat, and Careem offers numerous advantages for businesses, researchers, and enthusiasts seeking insights into the culinary landscape. Here are several compelling reasons to engage in this practice:

Market Intelligence

Accessing data from these platforms provides valuable market intelligence. Businesses can analyze trends, identify popular cuisines, and understand consumer preferences to tailor their offerings.

Competitor Analysis

Scrutinizing the competition becomes more effective by scraping restaurant data. Businesses can track competitors’ menus, pricing strategies, and customer reviews, allowing for strategic adjustments to stay competitive.

Menu Planning and Optimization

For restaurant owners and chefs, scraping data assists in menu planning and optimization. Understanding which dishes are trending or receiving positive reviews helps craft menus that resonate with customers.

Pricing Strategies

Analyzing pricing structures across various restaurants aids in developing effective pricing strategies. Businesses can set competitive prices while maintaining profitability.

Customer Reviews and Feedback

Scraping customer reviews provides valuable feedback businesses can use to enhance their services. Understanding customer sentiments helps in improving overall customer satisfaction.

Discovering New Trends

Enthusiasts and food researchers can leverage scraped data to identify emerging culinary trends. This information is valuable for exploring new flavors, cuisines, and unique dining experiences.

Geographical Insights

Scraped data often includes location information, offering insights into the geographical distribution of restaurants. This is beneficial for businesses considering expansion or targeting specific markets.

Data-Driven Decision Making

Businesses can make informed decisions backed by real-time and historical data. This approach fosters agility and adaptability in responding to changes in consumer behavior or market trends.

Strategic Partnerships

For delivery service providers like Talabat and Careem, scraping data can identify potential partnerships with popular and trending restaurants, improving the variety and quality of their service offerings.

Enhanced User Experience

Platforms can leverage scraped data to enhance the user experience by providing personalized recommendations, showcasing popular dishes, and optimizing search functionalities.

Process to Follow to Scrape Restaurants Data from Deliveroo, Talabat and Careem

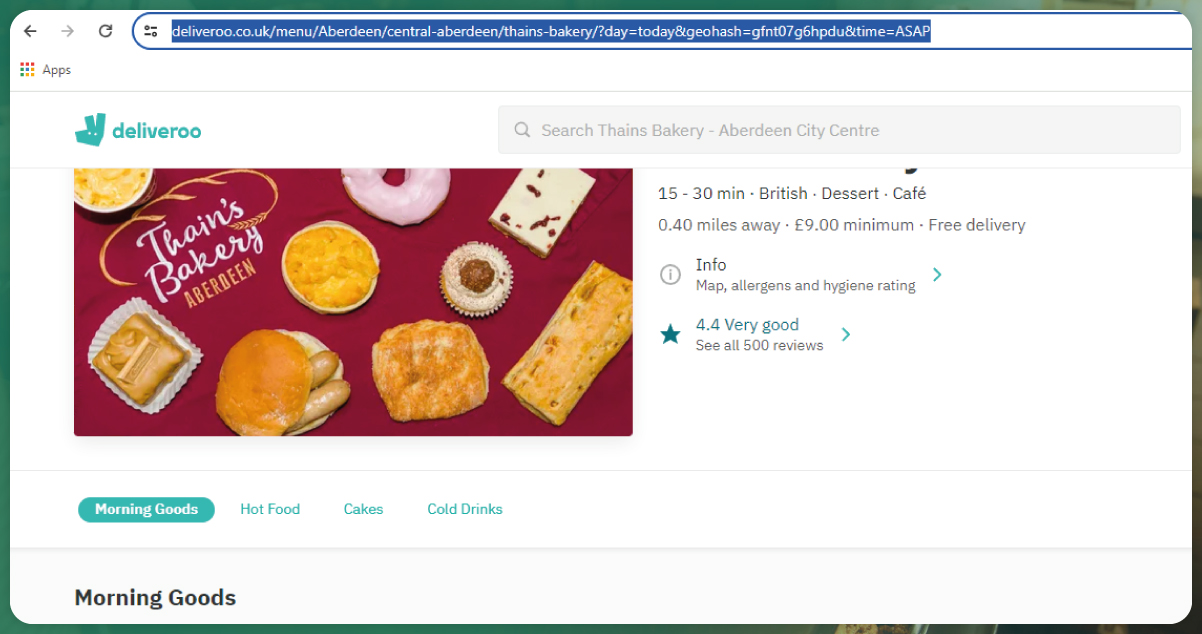

Scraping food delivery data from Deliveroo, Talabat, and Careem requires a strategic approach to ensure accuracy, compliance, and meaningful insights. The steps below run from identifying target URLs to visualizing the scraped data.

1. Identifying Target URLs

Before starting, gather the URLs of the specific restaurant pages you want to extract information from. Study the page structure and HTML layout of each one so that the later scraping steps are straightforward.
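
As a rough illustration of this step, the sketch below filters and de-duplicates a list of candidate URLs before any scraping begins. The hostnames in `ALLOWED_HOSTS`, the example paths, and the function name `clean_target_urls` are assumptions made for illustration; confirm the correct domains and page paths for your region.

```python
from urllib.parse import urlparse

# Assumed hostnames, for illustration only; verify them for your market.
ALLOWED_HOSTS = {"deliveroo.co.uk", "www.talabat.com", "www.careem.com"}


def clean_target_urls(candidates):
    """Keep HTTPS URLs on allowed hosts, dropping fragments and duplicates."""
    seen = set()
    targets = []
    for raw in candidates:
        url = raw.strip()
        parts = urlparse(url)
        if parts.scheme != "https" or parts.hostname not in ALLOWED_HOSTS:
            continue
        # Strip any #fragment and trailing slash so near-duplicates collapse.
        normalized = url.split("#", 1)[0].rstrip("/")
        if normalized not in seen:
            seen.add(normalized)
            targets.append(normalized)
    return targets


# Hypothetical restaurant page paths.
candidates = [
    "https://deliveroo.co.uk/menu/example-restaurant",
    "https://deliveroo.co.uk/menu/example-restaurant/",
    "http://deliveroo.co.uk/menu/other-restaurant",
    "https://example.org/menu/unrelated",
    "  https://www.talabat.com/uae/example-restaurant#reviews ",
]
target_urls = clean_target_urls(candidates)
```

Keeping the allowed hosts in one place makes it harder for a stray link to pull the scraper onto a site you never meant to visit.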

2. Utilizing Requests and BeautifulSoup

Employ the requests library to retrieve the HTML content of the restaurant pages efficiently. Subsequently, leverage the power of BeautifulSoup for HTML parsing, enabling the extraction of pertinent details such as restaurant names, cuisines, ratings, and customer reviews.
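
A minimal sketch of this step might look like the following. It assumes a made-up page layout: the `restaurant-card`, `name`, `cuisine`, and `rating` class names, the sample HTML, and the User-Agent string are all hypothetical, and each real platform will need its own selectors. The parsing is shown against an inline sample so that it runs without touching any live site.

```python
import requests
from bs4 import BeautifulSoup

# Hypothetical markup; real listing pages use different tags and class names.
SAMPLE_HTML = """
<div class="restaurant-card">
  <h2 class="name">Spice Route</h2>
  <span class="cuisine">Indian</span>
  <span class="rating">4.6</span>
</div>
<div class="restaurant-card">
  <h2 class="name">Pasta Corner</h2>
  <span class="cuisine">Italian</span>
</div>
"""


def fetch_html(url, timeout=10.0):
    """Download a page and return its HTML, raising on HTTP error statuses."""
    headers = {"User-Agent": "restaurant-research-bot/0.1"}  # placeholder value
    response = requests.get(url, headers=headers, timeout=timeout)
    response.raise_for_status()
    return response.text


def text_or_none(card, selector):
    """Return the stripped text of the first match, or None if it is absent."""
    node = card.select_one(selector)
    return node.get_text(strip=True) if node else None


def parse_restaurants(html):
    """Turn each restaurant card into a dict of name, cuisine, and rating."""
    soup = BeautifulSoup(html, "html.parser")
    records = []
    for card in soup.select("div.restaurant-card"):
        rating = text_or_none(card, ".rating")
        records.append({
            "name": text_or_none(card, ".name"),
            "cuisine": text_or_none(card, ".cuisine"),
            "rating": float(rating) if rating else None,
        })
    return records


restaurants = parse_restaurants(SAMPLE_HTML)
```

In a real run, `parse_restaurants(fetch_html(url))` would replace the sample string. Returning `None` for a missing field, rather than raising, keeps one incomplete card from discarding the rest of the page.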

3. Navigating Dynamic Content

Where a website loads content dynamically, often through JavaScript, consider a browser automation tool like Selenium so that all relevant data is captured. This is especially important for elements that load only in response to user interactions.

4. Extracting Data

Identify the HTML tags and classes housing the desired restaurant information. Extract critical details such as the restaurant name, cuisine type, location, ratings, and customer reviews. This phase forms the backbone of your data collection process, providing the foundation for insightful analysis.

5. Storing Data

Implement a robust data storage solution, such as a relational database like SQLite or MySQL. Persistent storage ensures the compilation of a comprehensive repository over time, facilitating historical analysis and trend tracking in the dynamic restaurant landscape.
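
One possible shape for this, using Python's built-in `sqlite3` module, is sketched below. The table name, column names, and helper functions are assumptions chosen for illustration. Each run appends rows with a timestamp instead of overwriting earlier ones, which is what makes historical comparison possible.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS restaurants (
            id         INTEGER PRIMARY KEY,
            platform   TEXT NOT NULL,
            name       TEXT NOT NULL,
            cuisine    TEXT,
            rating     REAL,
            scraped_at TEXT NOT NULL DEFAULT (datetime('now'))
        )
        """
    )
    return conn


def save_snapshot(conn, platform, records):
    """Append one scraping run; earlier runs are kept for trend analysis."""
    with conn:  # commits on success, rolls back if an insert fails
        conn.executemany(
            "INSERT INTO restaurants (platform, name, cuisine, rating) "
            "VALUES (?, ?, ?, ?)",
            [(platform, r["name"], r.get("cuisine"), r.get("rating"))
             for r in records],
        )


# Hypothetical records, shaped like the output of a parsing step.
conn = open_store()  # pass a file path such as "restaurants.db" to persist
save_snapshot(conn, "talabat", [
    {"name": "Spice Route", "cuisine": "Indian", "rating": 4.6},
    {"name": "Pasta Corner", "cuisine": "Italian"},
])
row_count = conn.execute("SELECT COUNT(*) FROM restaurants").fetchone()[0]
```

The same schema would carry over to MySQL with minor changes to the column types and the timestamp default.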

6. Respecting Website Terms of Service

Adherence to ethical scraping practices and strict compliance with the terms of service of each platform is paramount. This approach ensures legal compliance and fosters a responsible and sustainable data collection process.
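
Part of this can be automated. The sketch below uses the standard library's `urllib.robotparser` to check a site's robots.txt rules before requesting a page. The robots.txt content, the crawler name, and the example URLs are hypothetical. A robots.txt check is a separate matter from a platform's terms of service, which still need to be read in full.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "restaurant-research-bot"  # hypothetical crawler name

# Hypothetical robots.txt content, parsed offline for illustration. Against a
# live site you would call parser.set_url(".../robots.txt") and parser.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())


def is_allowed(url):
    """Return True if the parsed rules permit our crawler to fetch this URL."""
    return parser.can_fetch(USER_AGENT, url)


menu_ok = is_allowed("https://example.com/menu/example-restaurant")
checkout_ok = is_allowed("https://example.com/checkout")
delay_seconds = parser.crawl_delay(USER_AGENT)  # None when no delay is declared
```

When a crawl delay is declared, sleeping for at least that long between requests is a simple way to keep the scraper from straining the site.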

7. Scheduled Scraping (Optional)

For those seeking regularly updated insights, consider implementing scheduled scraping. Utilize tools like cron to automate your scraper, enabling periodic runs at predefined intervals. This is handy for staying abreast of changes and additions to restaurant data.

8. Implementing Robust Error Handling

Account for the unpredictable nature of the web environment by incorporating robust error-handling mechanisms. These safeguards mitigate issues arising from network fluctuations, website structural changes, or unexpected errors, ensuring the reliability and resilience of your scraping script.
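
One common safeguard against network fluctuations is to retry a failed request with an increasing delay. The helper below is a sketch of that idea and not a complete error-handling strategy. The name `with_retries`, its parameters, and the simulated `flaky_fetch` function are assumptions for illustration.

```python
import time


def with_retries(action, attempts=3, base_delay=1.0,
                 retry_on=(OSError,), sleep=time.sleep):
    """Call action(), retrying on the given exceptions with doubling delays."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: let the caller see the real error
            sleep(base_delay * 2 ** (attempt - 1))  # 1s, 2s, 4s, ...


# Simulated fetch that fails twice before succeeding.
calls = {"count": 0}


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network problem")
    return "<html>ok</html>"


delays = []  # record the waits instead of actually sleeping in this demo
result = with_retries(flaky_fetch, sleep=delays.append)
```

Structural changes to a website call for a different safeguard: treat a missing element as a logged warning for that record, so one changed page does not abort the whole run.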

9. Visualization (Optional)

For a holistic understanding of the scraped data, consider incorporating visualization techniques. Use charts or graphs to present trends and patterns, transforming raw data into actionable insights to guide strategic decision-making.

Conclusion

At Actowiz Solutions, we emphasize the significance of tailoring your scraping script to the unique structure of each platform. This adaptive approach, combined with our unwavering commitment to ethical practices and legal compliance, guarantees that the data extracted becomes a valuable asset for your business. Elevate your restaurant data collection endeavors with Actowiz Solutions – where customization meets compliance. For tailored Deliveroo food delivery data scraping services that transform data into actionable insights, contact Actowiz today. Make your data work for you, ensuring it enriches your understanding and aligns seamlessly with your business goals. Act now, and let Actowiz Solutions be your data optimization partner. You can also reach us for all your mobile app scraping, instant data scraper and web scraping service requirements.

Source: https://www.actowizsolutions.com/scrape-restaurants-data-from-deliveroo-talabat-and-careem.php
