How To Perform Web Data Scraping For UK And Ireland Student Clubs And Societies

In today’s digital era, data has become an invaluable asset, serving purposes that range from academic research and market analysis to networking. One dataset of particular interest is information about student societies at universities. These societies offer students avenues for personal growth, professional development, social connections, and active involvement in extracurricular pursuits. This article is a guide to scraping information on student societies from universities in the United Kingdom and Ireland. Our primary focus will be scraping data from the Russell Group universities.

List of Data Fields

- First name

- Surname

- Society name

- Social media links

- Contact number (if available)

- Targeted university

- Description

- Meeting Time and Locations

- Membership Requirements

- Photos and Media

- Affiliations

- Society Size

- Fundraising and Sponsorship
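One way to keep these fields consistent across universities is to define a small record type before writing any scraping code. The sketch below is only an illustration: the class name `SocietyRecord` and the attribute names are assumptions derived from the list above, and most fields are optional because many society pages will not publish all of them.

```python
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class SocietyRecord:
    """One scraped society; names here are illustrative, not a fixed schema."""
    society_name: str
    university: str
    first_name: Optional[str] = None
    surname: Optional[str] = None
    social_media_links: List[str] = field(default_factory=list)
    contact_number: Optional[str] = None  # only if available
    description: Optional[str] = None
    meeting_times_and_locations: Optional[str] = None
    membership_requirements: Optional[str] = None
    media_urls: List[str] = field(default_factory=list)
    affiliations: List[str] = field(default_factory=list)
    society_size: Optional[int] = None
    fundraising_and_sponsorship: Optional[str] = None


# Made-up example values, not real scraped data
record = SocietyRecord(society_name="Chess Club", university="Example University")
print(asdict(record)["society_name"])
```

Converting each record with `asdict` gives a plain dictionary that is easy to write to a CSV file or a database later.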

A Closer Look at Web Scraping Fundamentals

Web scraping is a powerful and intricate process that automatically retrieves website data. When your objective is to scrape student clubs and societies across the UK and Ireland, some essential tools and steps come into play. Here’s a detailed breakdown of the critical components:

- Select a Programming Language: To scrape UK and Ireland student clubs and societies successfully, you must first choose a programming language. Python, with its rich ecosystem of libraries, is a favored choice for many. Its versatility and readability make it an ideal candidate for web scraping projects.

- Leverage Specialized Libraries: While a programming language provides the foundation, specific libraries like Beautiful Soup and Requests play pivotal roles in web scraping. Beautiful Soup is used for parsing and navigating HTML content, while Requests simplifies the process of making HTTP requests to fetch web pages. These libraries significantly ease the task of data extraction.

- Understand the Website’s Policies: Before you start scraping campus data, thoroughly review the terms of service and policies of the website you intend to scrape. This step ensures that your scraping activities follow the website’s rules and guidelines. Violating a website’s terms can lead to legal consequences or to being blocked from accessing the site.

- Respect Robots.txt: Websites often provide a robots.txt file that specifies which parts of the site crawlers and web scrapers may access. It’s a best practice to adhere to the directives in the robots.txt file to maintain ethical and respectful scraping practices.
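The robots.txt check described above can be automated with Python's standard `urllib.robotparser` module. The following is a minimal sketch: the user agent name and the sample rules are made up for illustration, and the rules are parsed from a string so that the example runs without network access.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "society-research-bot"  # hypothetical name for your scraper

# Made-up rules for illustration; a real file lives at https://<site>/robots.txt
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Parse the text of a robots.txt file into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)
allowed_public = parser.can_fetch(USER_AGENT, "https://example.org/groups")
allowed_admin = parser.can_fetch(USER_AGENT, "https://example.org/admin/users")
delay = parser.crawl_delay(USER_AGENT)
print(allowed_public, allowed_admin, delay)
```

Against a live site, you would instead call `parser.set_url(...)` with the site's robots.txt address and then `parser.read()` to download the rules before calling `can_fetch`.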

Steps to Scrape Data from Russell Group of Universities

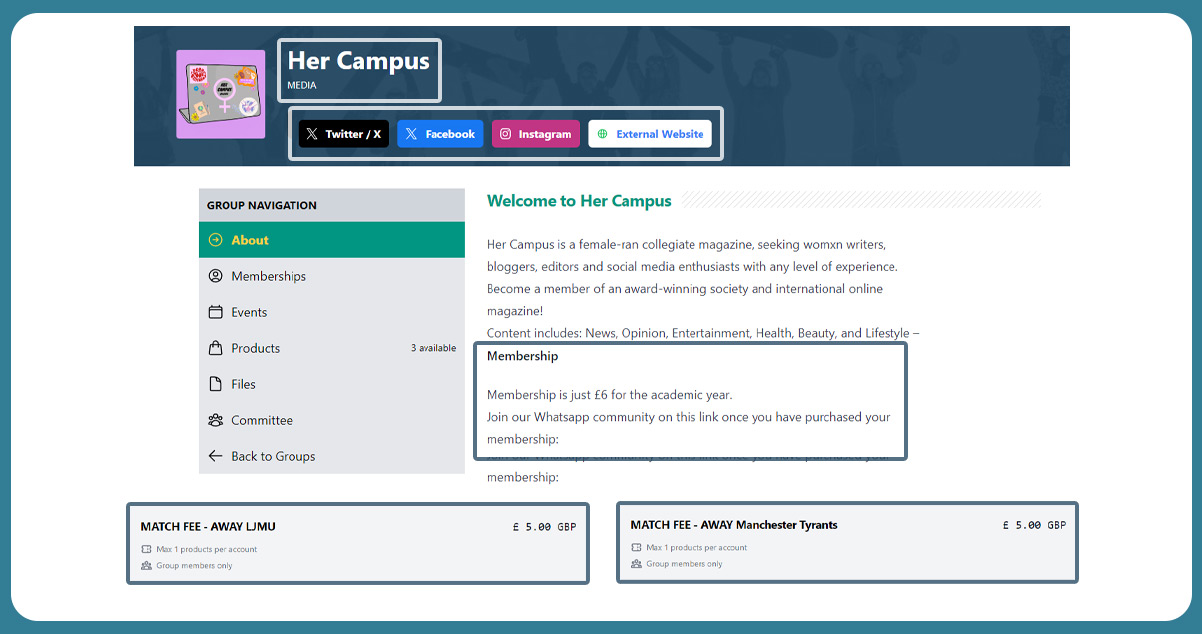

The Russell Group is a collection of 24 leading UK universities known for their research and teaching excellence. To scrape data on student societies from Russell Group universities, we can start with the Leeds University Union (LUU) website at https://engage.luu.org.uk/groups?utm_source=luuorguk&utm_campaign=clubsocpage.

Here are the detailed steps for scraping data from Russell Group universities using Python, Beautiful Soup, and Requests. We’ll also provide some code snippets to illustrate each step.

Step 1: Install the Necessary Libraries

You’ll need Python, Beautiful Soup, Requests, and potentially Selenium. You can install these libraries using pip:

pip install beautifulsoup4 requests

If you intend to use Selenium, you can install it with:

pip install selenium

Step 2: Inspect the Website

Open the LUU student society page (https://engage.luu.org.uk/groups?utm_source=luuorguk&utm_campaign=clubsocpage) in your web browser. Right-click on the web page and select “Inspect” or press F12 to open the browser’s developer tools. This lets you inspect the HTML structure of the page and identify the elements you want to scrape.

Step 3: Write a Scraping Script

Use Python along with Beautiful Soup and Requests to create a scraping script. Here’s a basic script that demonstrates how to retrieve and parse data from the LUU website. We assume you want to extract the society names, descriptions, and contact information:

import requests
from bs4 import BeautifulSoup

# Define the URL of the LUU student society page
url = "https://engage.luu.org.uk/groups?utm_source=luuorguk&utm_campaign=clubsocpage"

# Send an HTTP GET request to the URL (the timeout stops the script from hanging)
response = requests.get(url, timeout=30)

# Check if the request was successful (status code 200)
if response.status_code == 200:
    # Parse the HTML content of the page
    soup = BeautifulSoup(response.text, "html.parser")

    # Find and extract the data you need; select() accepts CSS selectors.
    # The selector strings below are placeholders to be replaced.
    society_names = [name.get_text(strip=True) for name in soup.select("element selector for society names")]
    descriptions = [desc.get_text(strip=True) for desc in soup.select("element selector for descriptions")]
    contact_info = [info.get_text(strip=True) for info in soup.select("element selector for contact information")]

    # Print the extracted data (you can also save it to a file or database)
    for name, desc, info in zip(society_names, descriptions, contact_info):
        print("Society Name:", name)
        print("Description:", desc)
        print("Contact Information:", info)
else:
    print("Failed to retrieve the webpage. Status code:", response.status_code)

In the script above, replace “element selector for society names”, “element selector for descriptions”, and “element selector for contact information” with the actual selectors that match the data you want to scrape, as identified with the developer tools in Step 2.

Step 4: Loop Through the Pages (if needed)

If the society data is spread across multiple pages, you can create a loop that visits each page and scrapes its societies until you’ve covered them all. You’ll need to adjust the URL and pagination logic in your script accordingly.
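A pagination loop along these lines might look like the sketch below. It assumes the site exposes a `page` query parameter, which is a hypothetical convention: check the real URLs in your browser first, since a given site may paginate differently or load results with JavaScript. The `scrape_page` argument stands for whatever function you wrote in Step 3 to turn one page URL into a list of rows.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_url(base_url, page):
    """Return base_url with a (hypothetical) 'page' query parameter set."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))
    query["page"] = str(page)
    return urlunsplit(parts._replace(query=urlencode(query)))


def scrape_all_pages(base_url, scrape_page, max_pages=50):
    """Call scrape_page(url) for page 1, 2, ... until a page yields no rows.

    max_pages is a safety cap so a site that never returns an empty page
    cannot keep the loop running forever.
    """
    rows = []
    for page in range(1, max_pages + 1):
        page_rows = scrape_page(page_url(base_url, page))
        if not page_rows:
            break
        rows.extend(page_rows)
    return rows
```

Stopping on the first empty page is one simple termination rule; following a "next" link found in the HTML is a common alternative when the site provides one.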

Step 5: Store the Data

To store the scraped data, you can save it in a structured format like a CSV file or a database. Python’s standard library includes the csv module for CSV files and the sqlite3 module for SQLite databases.
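As a rough sketch of both options, the functions below write a list of dictionaries to a CSV file and to an SQLite table. The field names (`name`, `description`, `contact`) and the table name `societies` are assumptions chosen to match the three values extracted in Step 3.

```python
import csv
import sqlite3

FIELDS = ["name", "description", "contact"]  # assumed column names


def save_to_csv(rows, path):
    """Write rows (a list of dicts keyed by FIELDS) to a CSV file with a header."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)


def save_to_sqlite(rows, conn):
    """Insert rows into a 'societies' table, creating it if needed."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS societies "
        "(name TEXT, description TEXT, contact TEXT)"
    )
    conn.executemany(
        "INSERT INTO societies (name, description, contact) "
        "VALUES (:name, :description, :contact)",
        rows,
    )
    conn.commit()
```

For example, `save_to_csv(rows, "societies.csv")` produces a spreadsheet-friendly file, while `save_to_sqlite(rows, sqlite3.connect("societies.db"))` keeps the data queryable with SQL.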

Expanding to Other UK and Irish Universities

Once you’ve successfully scraped data from Russell Group universities, the logical next step is to widen your project’s scope to cover more higher education institutions throughout the UK and Ireland. This can be an ambitious undertaking, but by following these steps and adhering to essential legal and ethical considerations, you can scrape with confidence:

1. Collect a List of Target Universities:

The first phase involves assembling a comprehensive list of universities in the UK and Ireland. To achieve this, you may leverage official sources, academic directories, or government websites. Additionally, consider utilizing existing datasets or APIs that provide information about universities to streamline this process.

2. Write a Generic Scraping Script:

Your initial scraping script, tailored for Russell Group universities, must be modified to function with diverse university websites. Different institutions may possess unique webpage structures and layouts, so your script must be adaptable. Consider using a modular approach where you can customize element selectors and scrape logic for each specific university’s student society page.

Utilize Python functions and classes to encapsulate the scraping logic, making it easier to plug in new universities as you expand your project. This abstraction enhances the maintainability and scalability of your scraping script.
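The modular approach described above could be sketched as a per-university configuration object plus one shared extraction function. Everything named here is illustrative: `SiteConfig`, its selector fields, the example university, and the sample HTML are assumptions, and real selectors have to come from inspecting each site.

```python
from dataclasses import dataclass

from bs4 import BeautifulSoup


@dataclass(frozen=True)
class SiteConfig:
    """Per-university settings; selectors are CSS selectors you supply."""
    university: str
    url: str
    card_selector: str         # element wrapping one society
    name_selector: str         # society name inside the card
    description_selector: str  # description inside the card


def extract_societies(html, config):
    """Return one dict per society card found in html, using config's selectors."""
    soup = BeautifulSoup(html, "html.parser")
    societies = []
    for card in soup.select(config.card_selector):
        name = card.select_one(config.name_selector)
        if name is None:
            continue  # skip cards without a name rather than crash
        desc = card.select_one(config.description_selector)
        societies.append({
            "university": config.university,
            "name": name.get_text(strip=True),
            "description": desc.get_text(strip=True) if desc else "",
        })
    return societies


# Hypothetical configuration and made-up HTML for demonstration only
EXAMPLE = SiteConfig(
    university="Example University",
    url="https://example.org/societies",
    card_selector="div.society",
    name_selector="h3",
    description_selector="p.summary",
)
SAMPLE_HTML = (
    '<div class="society"><h3>Chess Club</h3><p class="summary">Weekly games.</p></div>'
    '<div class="society"><h3> Hiking </h3></div>'
)
societies = extract_societies(SAMPLE_HTML, EXAMPLE)
print(societies)
```

Adding a new university then means adding one more `SiteConfig` rather than writing a new script, provided its pages follow the card-style layout this sketch assumes.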

3. Automate the Process:

When you’re dealing with a substantial number of universities, manual scraping can become impractical and time-consuming. Automation is critical to efficiency. Tools like Selenium, a web automation framework, can be a valuable asset. Selenium allows you to interact with web pages, navigate through them, and scrape data more efficiently. By scripting browser interactions, you can automate tasks such as clicking through multiple pages, filling out forms, and handling dynamic content.

Configure Selenium to simulate human-like behavior by incorporating random delays between requests. This not only makes your scraping activity more discreet but also reduces the risk of being flagged as a bot by website administrators.
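Random delays between page visits can be implemented with the standard library alone, independent of the browser automation tool. In the sketch below, the helper names and the default 2 to 6 second range are assumptions, and `visit` stands for any function that loads one URL.

```python
import random
import time


def polite_pause(min_seconds=2.0, max_seconds=6.0, sleep=time.sleep):
    """Sleep for a random duration in [min_seconds, max_seconds] and return it."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay


def visit_all(urls, visit, pause=polite_pause):
    """Call visit(url) for each URL, pausing between consecutive visits."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            pause()  # no pause needed before the very first request
        results.append(visit(url))
    return results
```

With Selenium, `visit` could be a small function that calls `driver.get(url)` and returns `driver.page_source`, which keeps the delay logic separate from the browser code.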

4. Handle Exceptions:

As you work across a diverse landscape of university websites, expect variations in webpage structures and unexpected issues. It’s imperative to include exception handling in your script to maintain a robust and reliable scraping process. This allows your script to deal gracefully with errors such as connection problems, missing data, or changes in the website’s layout.

Implement robust error-handling mechanisms, logging, and alert systems to promptly identify and address issues as they arise. This proactive approach is instrumental in maintaining the integrity of your data collection process.
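As a minimal sketch of this idea, the function below retries a failed fetch with exponential backoff and logs each failure. The function name, the logger name, and the retry defaults are assumptions. It catches `OSError` because the network exceptions raised by Requests (`requests.RequestException`) and by the standard library both derive from it.

```python
import logging
import time

logger = logging.getLogger("society_scraper")  # hypothetical logger name


def fetch_with_retries(fetch, url, retries=3, backoff=2.0, sleep=time.sleep):
    """Call fetch(url), retrying on network errors with exponential backoff.

    Returns the result of fetch, or None if every attempt fails.
    """
    for attempt in range(1, retries + 1):
        try:
            return fetch(url)
        except OSError as exc:  # covers connection errors and timeouts
            logger.warning("Attempt %d/%d for %s failed: %s",
                           attempt, retries, url, exc)
            if attempt < retries:
                sleep(backoff ** attempt)  # wait 2s, 4s, ... between attempts
    logger.error("Giving up on %s after %d attempts", url, retries)
    return None
```

Returning `None` instead of raising lets a long run continue with the next university, and the log records which sites need attention afterwards. Missing elements or layout changes are a separate case, usually handled by checking for `None` before reading an element's text.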

Legal and Ethical Considerations

When venturing into web scraping, it’s paramount to be conscientious about the legal and ethical aspects of the practice. Here are some key considerations:

Review Terms of Service: Always scrutinize the terms of service and usage policies of the websites you intend to scrape. Ensure your scraping activities comply with these guidelines. Violating a website’s terms can lead to legal repercussions.

Respect Robots.txt: Check the website’s robots.txt file to understand which parts of the site are off-limits for scraping. Following the directives in the robots.txt file is a best practice that reflects respect for the website owner’s wishes.

Rate Limiting: Avoid overloading a website’s servers with an excessive number of requests in a short period. Implement rate limiting so that your scraper neither disrupts the normal functioning of the website nor gets blocked.

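Privacy and Data Protection: If your scraping involves personal data, be aware of privacy and data protection laws, such as the General Data Protection Regulation (GDPR) in the European Union, which applies in Ireland, and the UK GDPR and Data Protection Act 2018 in the United Kingdom. Names and contact numbers of society committee members are personal data, so handle them responsibly and securely.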

Conclusion: Scraping data on student societies from universities in the UK and Ireland can be a valuable project for academic research, marketing, or community building. With the right tools and knowledge, or with the help of professional university data scraping services, you can extract this information from university websites. Remember to always adhere to ethical and legal guidelines while conducting your scraping activities, and be prepared to adapt your scripts to the varying structures of different university websites.
