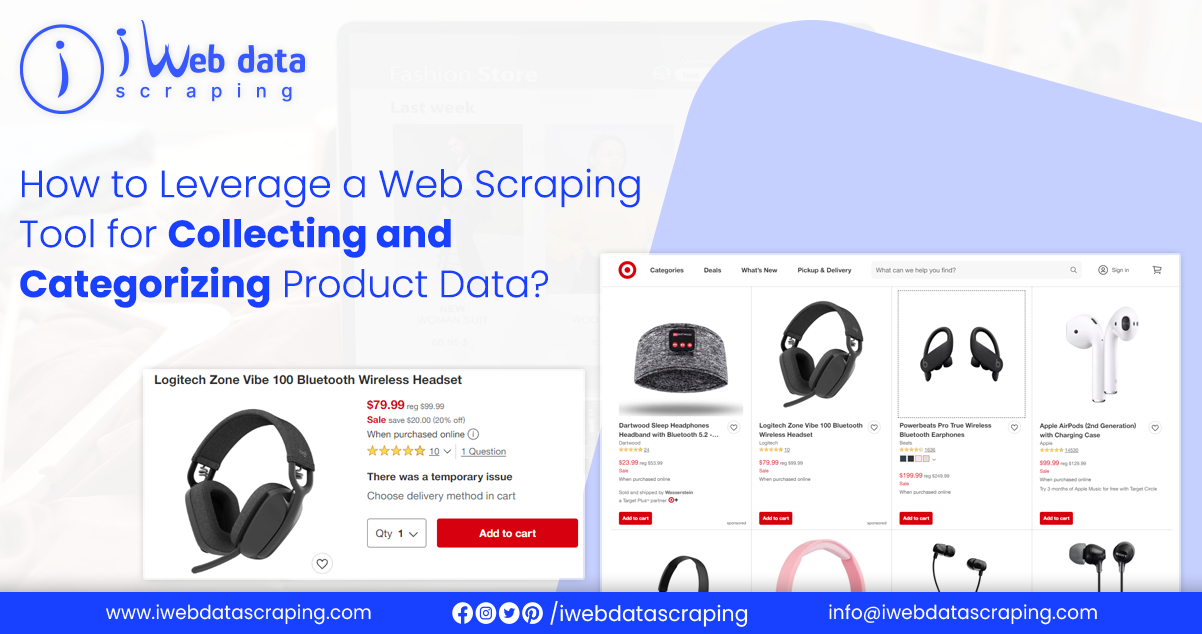

How To Leverage A Web Scraping Tool For Collecting And Categorizing Product Data?

Extracting large amounts of data from a website by hand consumes a lot of time and effort, and as the saying goes, “Time is Money.” Hours spent copying data are hours taken away from other tasks. This is where scraping E-Commerce product data comes in: it makes the job easier and faster, and it can extract and save the data in the desired format. So, buckle up! We are going to describe how to make a basic extraction tool and create your own version in Python.

All About Web Scraping

Web scraping is an automated data extraction process that collects unstructured information from a website and structures it in the desired layout, making it easy to read. You can perform it with online services, with APIs, or with a tool you build yourself.

Product information is essential when comparing prices from multiple retailers. You can collect product data manually, but that takes a lot of time when there are many products. A programming script can extract the values from web pages instead. Because websites are structured differently, the script has to be told where each value sits on each site.
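As a rough illustration of a script that pulls values out of a page, here is a minimal sketch. It uses Python's standard-library `html.parser` rather than a scraping library so that it runs without extra installs. The sample markup, the class names, and the `ProductParser` helper are all hypothetical; a real site would need its own mapping.

```python
from html.parser import HTMLParser

# Hypothetical product-page markup; real sites use different tags and classes.
SAMPLE_HTML = """
<div class="product">
  <h1 class="product-title">Wireless Mouse</h1>
  <span class="price">$24.99</span>
</div>
"""

class ProductParser(HTMLParser):
    """Collects the text of elements whose class matches a site-specific rule."""

    def __init__(self, class_to_field):
        super().__init__()
        self.class_to_field = class_to_field
        self.current_field = None
        self.values = {}

    def handle_starttag(self, tag, attrs):
        # Remember which field (if any) the element we just entered maps to.
        css_class = dict(attrs).get("class")
        self.current_field = self.class_to_field.get(css_class)

    def handle_data(self, data):
        # Store the first non-empty text found inside a matching element.
        if self.current_field and data.strip():
            self.values[self.current_field] = data.strip()
            self.current_field = None

# This mapping is the part that has to change from one website to the next.
rules = {"product-title": "name", "price": "price"}
parser = ProductParser(rules)
parser.feed(SAMPLE_HTML)
print(parser.values)
```

Keeping the site-specific rules in a plain dictionary means that supporting another retailer is mostly a matter of writing a new mapping, not a new parser.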

List of Data Fields

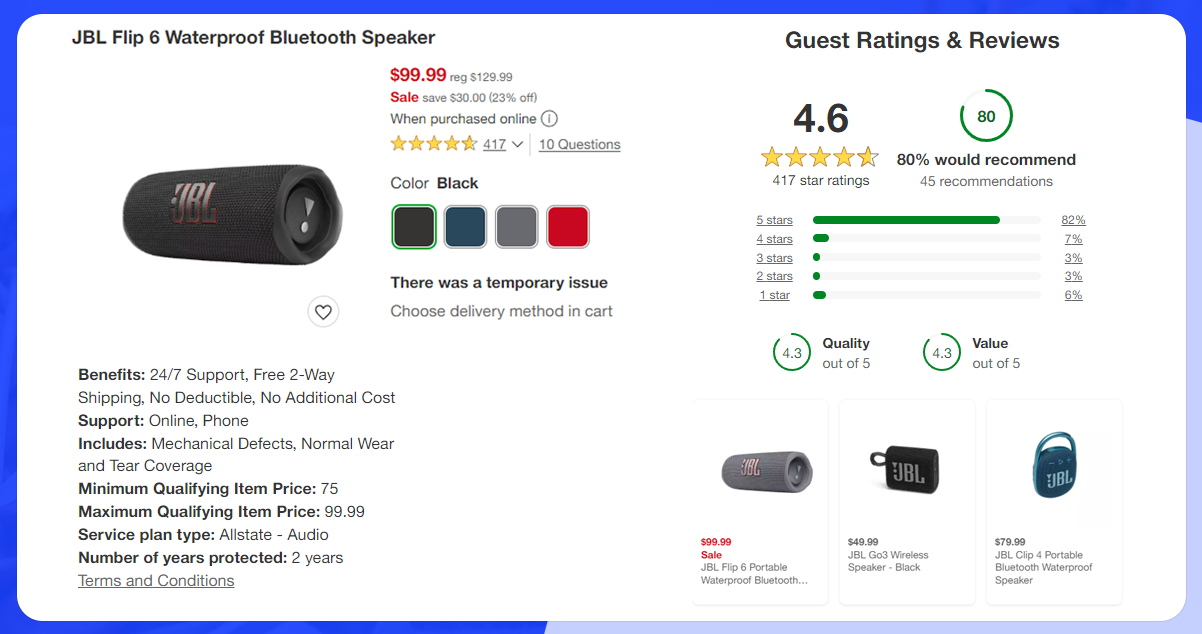

The following data fields can be extracted from product pages:

- Product Name

- Description

- Price

- Image

- Rating

- Specifications

- Variations

- Availability

- Reviews

- Categories

- Brand

- Features
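One possible way to hold these fields in code is a small record type. The sketch below is an assumption about how such a record might look, not a fixed schema: the `Product` class, its field names, and the default values are all illustrative.

```python
from dataclasses import dataclass, field, asdict
from typing import Optional

@dataclass
class Product:
    """One scraped product; only the name is required."""
    name: str
    price: Optional[float] = None
    description: str = ""
    image_url: str = ""
    rating: Optional[float] = None
    brand: str = ""
    availability: str = ""
    categories: list = field(default_factory=list)
    features: list = field(default_factory=list)
    specifications: dict = field(default_factory=dict)
    variations: list = field(default_factory=list)
    reviews: list = field(default_factory=list)

# Fields missing from a page simply keep their defaults.
item = Product(name="Wireless Mouse", price=24.99, categories=["Electronics"])
print(asdict(item))
```

Giving every optional field a default lets the same record type serve pages that expose only some of the fields.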

Extracting Data from Different Websites

Website data extraction is a valuable skill for many applications, including data mining, analysis, and automating repetitive tasks. With the vast amount of data available on the internet, extracting it can provide deeper understanding and help you make informed decisions.

- Finance companies can use the extracted data to make data-driven decisions about when to buy and sell.

- Travel companies can track prices in their target market and gain a competitive advantage.

- Restaurants can scrape review data and make changes where needed.

Methods of Extracting Data From a Website

Although several methods exist to scrape eCommerce and retail data from a website, the best method depends on the specific needs and the website structure. Listed below are some of the standard methods:

- Manual Copy-Paste Method: The simplest approach is to copy the data and paste it into a spreadsheet or other document. It is suitable when there is only a small amount of data.

- Web Browser Extension: Extensions installed in your web browser let you select and extract specific data based on your requirements.

- Official Data APIs: Several websites offer APIs that provide access to their data in a structured format. Where one exists, an API is the most straightforward way to collect data from a website in an organized and ready-to-use format.

- Web Scraping Services: If you wish to refrain from handling proxies and headless browsers, you can seek professional help from product data scraping services. They are well-versed in handling the technical aspects of data collection.

- Designing Your Own Scraper: You can use libraries such as BeautifulSoup (BS4) to extract the necessary data.
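To illustrate why an official API is often the easiest of these options, the sketch below parses a JSON response body into product records. The payload, its field names (`products`, `title`, `price`, `in_stock`), and the `parse_products` helper are hypothetical; a real API documents its own structure.

```python
import json

# Hypothetical API response body; a real API defines its own field names.
API_RESPONSE = """
{"products": [
  {"title": "Wireless Mouse", "price": "24.99", "in_stock": true},
  {"title": "Desk Lamp", "price": "18.50", "in_stock": false}
]}
"""

def parse_products(body):
    """Turn the JSON text into a list of plain dictionaries."""
    payload = json.loads(body)
    return [
        {
            "name": entry["title"],
            "price": float(entry["price"]),
            "available": entry["in_stock"],
        }
        for entry in payload.get("products", [])
    ]

products = parse_products(API_RESPONSE)
print(products)
```

Because the data already arrives structured, there is no HTML to parse and no page layout to keep up with.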

Before delving into how to build your own Python web scraping tool for collecting and categorizing product data, let’s understand its importance.

Importance of Web Scraping Product Data

Extracting data from websites is essential in various fields and industries. Here are some key reasons why:

Data Collection and Analysis: Web data scraping enables the collection of large amounts of data from websites in a structured format. This data is helpful for analysis, research, market intelligence, and decision-making. It allows businesses and individuals to gather valuable information from multiple sources efficiently and at a larger scale.

Market Research and Competitive Intelligence: Web scraping is essential for conducting market research and gathering competitive intelligence. Data scraped from e-commerce websites helps businesses monitor competitors’ activities, pricing strategies, product offerings, and customer reviews. By analyzing scraped data, companies can identify market trends, customer preferences, and areas for improvement, gaining a competitive edge.

Real-time Data Monitoring: Many websites frequently update their data, such as stock prices, news articles, or social media trends. Web scraping enables real-time monitoring of such data, allowing businesses to stay updated with the latest information and make timely decisions. Real-time data collection is particularly valuable in the finance, news, social media monitoring, and e-commerce industries.

Lead Generation: Web scraping helps extract contact information from websites, including email addresses, phone numbers, or social media profiles. This data is helpful for lead generation, customer acquisition, and sales prospecting. It helps identify potential leads, target specific industries or demographics, and streamline sales efforts.

Content Aggregation: Web scraping allows the aggregation and monitoring of content from various websites. This enhances the user experience by providing users with comprehensive and updated information.

Gathering Mailing Addresses: Companies promoting themselves need many mailing addresses to reach their target audience. An advanced web scraping tool can help download contact information from the targeted websites.

Pricing Optimization: Collect pricing data to understand how your competitors set the charges for a product or service, and keep continuous track of ever-changing markets.

Social Media: Scraping social media data with a web scraper can help you spot trends, respond to the changing market, and stay ahead of the competition. It also helps you understand customers’ sentiment about your products or brands.

Why Use Python?

Python is a well-known and easy-to-use programming language. Listed below are some of the significant advantages that make it a good option for web scraping:

- Easy-to-Read Syntax: Thanks to its clean syntax, Python is often described as “executable pseudocode.” The indentation used to indicate blocks makes it easy to read.

- Easy to Use: It doesn’t require semicolons (;) or curly braces ({}) to indicate a block. The indentation makes the code less messy and more readable.

- Community: The Python community is large and keeps growing. If you get stuck in the code at any time, you can seek help.

- Huge Library Collections: Python has several valuable libraries, such as Selenium, BeautifulSoup, and Pandas. All these libraries are helpful for data extraction.

- Dynamically Typed: The type of a variable is determined only at runtime, so you do not have to declare types up front. It saves us some precious time.

- Less Writing: Longer code doesn’t mean better code. In Python, small fragments of code can do a lot, which saves time while writing.
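A short sketch can make the last two points concrete. The price strings below are made-up sample values.

```python
raw_prices = ["$24.99", "$18.50", "$7.00"]

# Dynamic typing: the same name can hold a string first and a float later.
value = raw_prices[0]
print(type(value).__name__)  # str
value = float(value.lstrip("$"))
print(type(value).__name__)  # float

# Less writing: convert every price and average them in two lines.
prices = [float(p.lstrip("$")) for p in raw_prices]
average = sum(prices) / len(prices)
print(round(average, 2))
```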

Categorizing Extracted Information Using Web Scraping Tools

Categorizing extracted information using web scraping tools typically involves organizing the collected data into specific categories or groups based on predefined criteria. Here are the general steps to categorize extracted information using these tools:

Define Categories: Determine the categories or groups into which you want to organize the extracted information. For example, if you are parsing data from an e-commerce website, your categories could be “Electronics,” “Clothing,” “Home Appliances,” etc.

Identify Key Data Fields: Identify the data fields that will help classify the information. For instance, if you are categorizing products, an extracted “Category” or “Product Type” field would be crucial for classification.
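These first two steps could be sketched as a keyword lookup on the key field. The keyword lists and the `categorize` helper are illustrative assumptions; real rules depend on the site and on how precise the categories need to be.

```python
# Hypothetical rules: each category lists keywords looked up in the key field.
CATEGORY_KEYWORDS = {
    "Electronics": ["mouse", "laptop", "headphones"],
    "Clothing": ["shirt", "jeans", "jacket"],
    "Home Appliances": ["blender", "vacuum", "toaster"],
}

def categorize(product_type, default="Uncategorized"):
    """Return the first category whose keyword appears in the field value."""
    text = product_type.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return category
    return default

print(categorize("Wireless Mouse"))
print(categorize("Garden Hose"))
```

Simple substring matching like this can misfire on ambiguous words, so a fallback category keeps unmatched items visible for review.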

Extract and Clean Data: Use tools or libraries to extract the required data from the website or app. Ensure the extracted data is clean and formatted correctly, removing unnecessary characters or inconsistencies.
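As a minimal sketch of the cleaning part, the helpers below normalize whitespace and turn a price string into a number. The function names are hypothetical, and the price helper assumes that commas are thousands separators, which does not hold for every locale.

```python
import re

def clean_text(value):
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", value).strip()

def clean_price(value):
    """Return the price as a float, or None when no number is found.

    Assumes commas are thousands separators (e.g. "1,299.00").
    """
    match = re.search(r"\d+(?:\.\d+)?", value.replace(",", ""))
    return float(match.group()) if match else None

print(clean_text("  Wireless \n  Mouse "))
print(clean_price("$1,299.00"))
print(clean_price("Call for price"))
```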

Create Data Structures: Organize the categorized data into appropriate data structures. Each category can be held in a separate data structure containing the relevant records.
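One simple way to do this in Python is a dictionary that maps each category to a list of records. The sample records below are made up for illustration.

```python
from collections import defaultdict

# Made-up records that already carry a category label.
records = [
    {"name": "Wireless Mouse", "category": "Electronics"},
    {"name": "Denim Jacket", "category": "Clothing"},
    {"name": "Laptop Stand", "category": "Electronics"},
]

# Each category gets its own list, created on first use.
by_category = defaultdict(list)
for record in records:
    by_category[record["category"]].append(record)

for category, items in by_category.items():
    print(category, [item["name"] for item in items])
```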

Save Categorized Data: Choose an appropriate method to store the categorized data. It can be a local database, a spreadsheet, or another storage system that suits your needs.
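For the local-database option, a sketch with Python's built-in `sqlite3` module might look like this. The table layout and sample rows are assumptions, and the in-memory database is used only so the example leaves no file behind.

```python
import sqlite3

# Made-up categorized records: (name, category, price).
records = [
    ("Wireless Mouse", "Electronics", 24.99),
    ("Denim Jacket", "Clothing", 59.00),
]

# ":memory:" keeps the sketch side-effect free; pass a file path to persist.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, category TEXT, price REAL)")
conn.executemany("INSERT INTO products VALUES (?, ?, ?)", records)
conn.commit()

# Categories can then be queried directly.
rows = conn.execute(
    "SELECT name, price FROM products WHERE category = ?", ("Electronics",)
).fetchall()
print(rows)
```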

Validate: Review the results of categorization to ensure accuracy and make any necessary adjustments or refinements to the categorization.
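Part of this review can be automated with a few basic checks. In the sketch below, the allowed categories, the rules, and the `find_problems` helper are illustrative assumptions; which checks matter depends on your data.

```python
ALLOWED_CATEGORIES = {"Electronics", "Clothing", "Home Appliances"}

def find_problems(records):
    """Return (index, message) pairs for records that need a manual look."""
    problems = []
    for index, record in enumerate(records):
        if not record.get("name"):
            problems.append((index, "missing name"))
        if record.get("category") not in ALLOWED_CATEGORIES:
            problems.append((index, "unknown category"))
        price = record.get("price")
        if price is None or price <= 0:
            problems.append((index, "invalid price"))
    return problems

# Made-up records: the first is fine, the second fails every check.
records = [
    {"name": "Wireless Mouse", "category": "Electronics", "price": 24.99},
    {"name": "", "category": "Garden", "price": None},
]
issues = find_problems(records)
print(issues)
```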

Web scraping tools or libraries, such as BeautifulSoup, Scrapy, etc., provide the necessary functionality to extract and manipulate data. Depending on the complexity of the categorization task, you may need to customize the code and implement additional logic for categorization.

For further details, contact iWeb Data Scraping now! You can also reach us for all your web scraping service and mobile app data scraping needs.

Know more: https://www.iwebdatascraping.com/web-scraping-tool-for-collecting-and-categorizing-product-data.php
