LiDAR annotation is transforming industries from autonomous driving to urban planning, construction, and environmental management. By converting raw 3D scans into structured data, it enables safer navigation, smarter planning, and more efficient resource use. With the global LiDAR market projected to reach $3.7 billion by 2028, its impact is only accelerating.

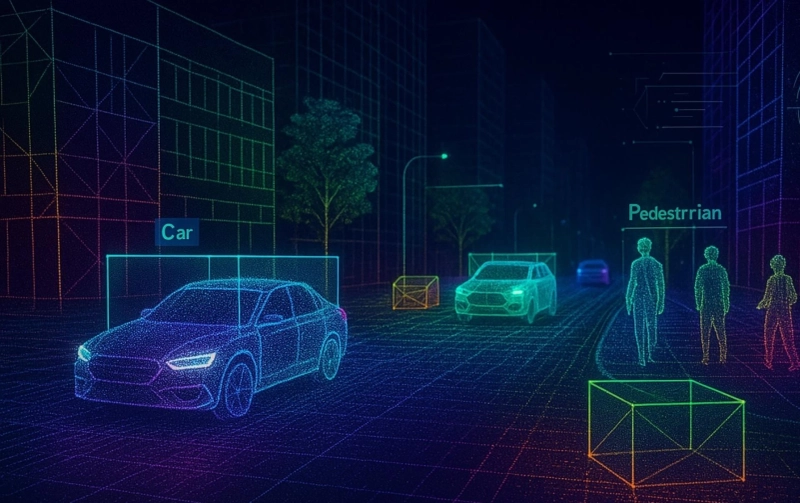

Artificial intelligence depends on quality data. From autonomous vehicles to smart city infrastructure, AI systems need precise, real-world context to make safe decisions. Traditional cameras capture detailed 2D visuals, but they fall short when it comes to depth and spatial accuracy. That’s where 3D point cloud annotation services step in. By turning raw LiDAR scans into structured training datasets, these services give AI the ability to understand distance, movement, and spatial relationships with remarkable accuracy.

LiDAR (Light Detection and Ranging) technology makes this possible. It emits laser pulses, measures their return times, and generates centimeter-accurate 3D maps of an environment in any lighting, including full darkness. Weather such as rain can still add noise to a scan, and for AI to use this data effectively, it needs careful annotation. Below, we’ll break down the five concepts you need to know for successful 3D LiDAR annotation.

How LiDAR Works and Why Its Data Formats Matter

LiDAR works on a simple principle: send laser pulses, measure their return, and calculate distances. Modern LiDAR systems emit hundreds of thousands of pulses per second, creating dense, detailed point clouds.
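To make the principle concrete, here is a minimal sketch of the time-of-flight calculation. The function names and the beam-angle convention (azimuth around the vertical axis, elevation above the horizontal plane) are assumptions chosen for illustration, not any particular sensor's API.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_time_of_flight(round_trip_seconds: float) -> float:
    """Distance to the surface that reflected the pulse.

    The pulse travels to the surface and back, so the one-way
    distance is half of the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def polar_to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one range measurement plus its beam angles into an (x, y, z) point."""
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return x, y, z


# A pulse that returns after 200 nanoseconds hit something roughly 30 m away.
distance_m = range_from_time_of_flight(200e-9)
point = polar_to_cartesian(distance_m, math.radians(15.0), math.radians(-2.0))
```

Repeating this for every pulse in a sweep is what produces the point cloud that annotators later label.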

Two critical formats dominate AI training workflows:

- Sequential Point Clouds – These don’t just freeze a moment; they capture a stream of moments. That continuity is crucial for training AI to understand motion, like spotting when a cyclist is about to cross or when a pedestrian changes pace.

- 4D BEV (Bird’s Eye View + Time) – This transforms 3D spatial data into a clean overhead map, while also folding in time as a dimension. It’s especially valuable for autonomous driving because it simplifies the complexity of the real world into a layout an AI can process quickly, supporting faster and safer real-time decision making.
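As a rough illustration of how the two formats relate, the sketch below rasterizes each frame of a sequence into an overhead occupancy grid and stacks the grids along a time axis. The grid extents, the 0.5 m cell size, and the function names are assumptions for this example; production pipelines usually encode richer per-cell features such as height or intensity.

```python
import numpy as np


def points_to_bev(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell_size=0.5):
    """Rasterize an (N, 3) array of x, y, z points into a 2D grid of point counts."""
    nx = int(round((x_range[1] - x_range[0]) / cell_size))
    ny = int(round((y_range[1] - y_range[0]) / cell_size))
    bev = np.zeros((nx, ny), dtype=np.int32)

    # Drop points that fall outside the area covered by the grid.
    in_range = (
        (points[:, 0] >= x_range[0]) & (points[:, 0] < x_range[1])
        & (points[:, 1] >= y_range[0]) & (points[:, 1] < y_range[1])
    )
    kept = points[in_range]

    # Convert metric coordinates into integer cell indices.
    ix = np.clip(((kept[:, 0] - x_range[0]) / cell_size).astype(int), 0, nx - 1)
    iy = np.clip(((kept[:, 1] - y_range[0]) / cell_size).astype(int), 0, ny - 1)

    # np.add.at accumulates correctly when several points share a cell.
    np.add.at(bev, (ix, iy), 1)
    return bev


def stack_bev_sequence(frames, **grid_kwargs):
    """Turn a list of point cloud frames into a (T, nx, ny) array: BEV plus time."""
    return np.stack([points_to_bev(frame, **grid_kwargs) for frame in frames], axis=0)


# Two tiny frames: the same object moves half a metre forward between them.
frame_0 = np.array([[1.0, 0.0, 0.2], [1.1, 0.1, 0.3], [100.0, 0.0, 0.0]])
frame_1 = frame_0 + np.array([0.5, 0.0, 0.0])
bev_sequence = stack_bev_sequence([frame_0, frame_1])
```

The vertical axis is collapsed here on purpose: that is the simplification that makes BEV fast to process, and also why annotators still refer back to the full 3D view for height-dependent decisions.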

The Biggest Challenges in 3D Point Cloud Annotation

Annotating LiDAR data isn’t simple. It comes with technical and operational hurdles:

- Occlusion – Objects are often hidden behind others, making it difficult to label with certainty.

- Sparse and uneven density – Point density decreases with distance, so far-away objects are represented by only a handful of points and require human judgment to interpret.

- Noisy or incomplete scans – Environmental factors like rain or reflections create ghost points.

- Blurred boundaries – Surfaces such as ground-to-vehicle edges are difficult to segment.

Operationally, annotation is:

- Time-intensive – Complex urban frames can take 15–30 minutes each.

- Mentally demanding – Annotators face fatigue and inconsistency due to repetitive, detail-heavy tasks.

The best practice today is human-in-the-loop annotation, where AI handles routine labeling and humans resolve edge cases. This hybrid model combines efficiency with reliability, which is especially critical in 3D LiDAR annotation services for safety-first applications like autonomous driving.
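One common way to implement this split is to route model pre-labels by confidence. The sketch below assumes a hypothetical `PreLabel` record and a review threshold of 0.85; both are illustrative choices, and the right threshold depends on the model and the safety requirements of the project.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    object_id: int
    category: str
    confidence: float  # model score in [0, 1]


def route_prelabels(prelabels, review_threshold=0.85):
    """Split model pre-labels into auto-accepted ones and ones a human must check."""
    auto_accepted, needs_review = [], []
    for label in prelabels:
        if label.confidence >= review_threshold:
            auto_accepted.append(label)
        else:
            needs_review.append(label)
    return auto_accepted, needs_review


prelabels = [
    PreLabel(1, "car", 0.97),
    PreLabel(2, "pedestrian", 0.62),  # partly occluded, so the model is unsure
    PreLabel(3, "cyclist", 0.88),
]
accepted, review_queue = route_prelabels(prelabels)
```

In practice, teams often also sample a share of the auto-accepted labels for human spot checks, so that a confidently wrong model does not slip through unnoticed.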

Key Annotation Types You Can’t Ignore

To train AI effectively, different annotation methods serve different purposes. The main ones are:

1. 3D Bounding Boxes (Cuboids)

· What it does: Encases objects in 3D cuboids to define position, size, and orientation.

· Best for: Vehicles, pedestrians, cyclists.

· Industry fact: Over 80% of autonomous driving perception tasks rely on bounding boxes (Waymo dataset).

· Challenge: Ineffective for irregular shapes like trees or animals.

2. Semantic Segmentation

· What it does: Labels every single point in the cloud by category (road, building, vegetation).

· Best for: Complex city scenes or indoor spaces.

· Challenge: Extremely detailed and time-consuming; requires strict consistency guidelines.

3. 3D Object Tracking

· What it does: Tracks objects frame-to-frame, assigning unique IDs.

· Best for: Predicting movement patterns and ensuring real-time collision avoidance.
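The three annotation types can be pictured with one small data model. The sketch below is illustrative only: the `Cuboid` fields, the greedy nearest-center matcher, and the 2 m matching distance are assumptions made for this example, not a standard format or a production tracker.

```python
import math
from dataclasses import dataclass

import numpy as np


@dataclass
class Cuboid:
    center: tuple     # (x, y, z) in metres
    size: tuple       # (length, width, height) in metres
    yaw: float        # rotation about the vertical axis, in radians
    category: str
    track_id: int = -1  # -1 means "not yet assigned"


def points_in_cuboid(points, box):
    """Boolean mask of the (N, 3) points that fall inside the cuboid."""
    shifted = points - np.asarray(box.center)
    c, s = math.cos(box.yaw), math.sin(box.yaw)
    # Rotate by -yaw so the box becomes axis-aligned in its own frame.
    local_x = c * shifted[:, 0] + s * shifted[:, 1]
    local_y = -s * shifted[:, 0] + c * shifted[:, 1]
    local_z = shifted[:, 2]
    half_l, half_w, half_h = (d / 2.0 for d in box.size)
    return (
        (np.abs(local_x) <= half_l)
        & (np.abs(local_y) <= half_w)
        & (np.abs(local_z) <= half_h)
    )


def labels_from_cuboids(points, boxes, class_ids, background=0):
    """Coarse per-point labels: every point inside a box gets that box's class id."""
    labels = np.full(len(points), background, dtype=np.int64)
    for box in boxes:
        labels[points_in_cuboid(points, box)] = class_ids[box.category]
    return labels


def assign_track_ids(previous, current, max_distance=2.0):
    """Greedy matching: reuse the id of the nearest unmatched previous box of the
    same category if it is within max_distance, otherwise start a new track."""
    next_id = max((b.track_id for b in previous), default=-1) + 1
    unmatched = list(previous)
    for box in current:
        candidates = [p for p in unmatched if p.category == box.category]
        best = min(candidates, key=lambda p: math.dist(p.center, box.center), default=None)
        if best is not None and math.dist(best.center, box.center) <= max_distance:
            box.track_id = best.track_id
            unmatched.remove(best)
        else:
            box.track_id = next_id
            next_id += 1
    return current


car = Cuboid((10.0, 0.0, 1.0), (4.0, 2.0, 2.0), 0.0, "car", track_id=0)
points = np.array([[10.0, 0.0, 1.0], [11.9, 0.9, 1.5], [13.0, 0.0, 1.0]])
labels = labels_from_cuboids(points, [car], {"car": 1})
next_frame = assign_track_ids(
    [car],
    [Cuboid((10.8, 0.1, 1.0), (4.0, 2.0, 2.0), 0.0, "car"),
     Cuboid((30.0, 5.0, 1.0), (0.8, 0.8, 1.8), 0.0, "pedestrian")],
)
```

Labels derived from cuboids are only a coarse stand-in for true semantic segmentation, which also covers classes like road and vegetation that no box can enclose. That gap is the reason segmentation is listed as its own, more time-consuming annotation type.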

Ensuring Quality with Human-AI Collaboration

Bad annotations equal bad AI. A mislabeled pedestrian or misjudged vehicle trajectory can lead to life-threatening errors in autonomous systems. That’s why quality control is non-negotiable.

Best practices include:

- Clear labeling guidelines that define class boundaries and address edge cases.

- Version control to track changes across large datasets.

- Continuous QC feedback loops where AI highlights uncertainty and humans verify.
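One simple way to put numbers on such a feedback loop is to compare two independent annotations of the same frame. The sketch below computes per-class intersection-over-union (IoU) on per-point labels and flags weak classes for guideline review; the function names and the 0.8 threshold are assumptions for illustration.

```python
import numpy as np


def per_class_iou(labels_a, labels_b, num_classes):
    """IoU between two per-point label arrays, computed separately for each class."""
    ious = {}
    for cls in range(num_classes):
        a = labels_a == cls
        b = labels_b == cls
        union = np.logical_or(a, b).sum()
        if union == 0:
            continue  # neither annotator used this class in this frame
        ious[cls] = np.logical_and(a, b).sum() / union
    return ious


def classes_needing_review(ious, min_iou=0.8):
    """Classes where the two annotators disagree more than the project tolerates."""
    return [cls for cls, iou in ious.items() if iou < min_iou]


# Hypothetical class ids: 0 = road, 1 = vehicle, 2 = vegetation.
annotator_a = np.array([0, 0, 1, 1, 2, 2])
annotator_b = np.array([0, 0, 1, 2, 2, 2])
ious = per_class_iou(annotator_a, annotator_b, num_classes=3)
flagged = classes_needing_review(ious)
```

Low agreement on a class usually points to an ambiguous guideline rather than a careless annotator, so the flagged classes are a good starting point for refining edge-case rules.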

In fact, Gartner notes that poor-quality training data can reduce AI accuracy by as much as 20–30%. That figure alone underscores the importance of high-precision LiDAR annotation services.

Real-World Impact and Market Growth

LiDAR annotation has far-reaching applications:

· Autonomous Driving – Powers perception, detection, and localization.

· Urban Planning – Generates detailed 3D models for infrastructure and land-use assessment.

· Construction & BIM – Enables accurate models of existing structures and helps detect defects.

· Forestry & Environment – Supports biomass estimation, tree height measurement, and terrain analysis.

· Archaeology – Preserves cultural heritage through 3D site mapping.

· Aerial Surveying – Captures large-scale terrain and infrastructure models.

According to MarketsandMarkets, the global LiDAR market is projected to reach $3.7 billion by 2028, driven largely by the growing demand for 3D LiDAR annotation in autonomous systems.

Key industry use cases and benefits include:

· Automotive – Key use case: object tracking and localization. Benefit: safer autonomous navigation that improves decision-making for self-driving systems and reduces accident risks.

· Construction – Key use case: BIM and structural analysis. Benefit: reduced defects and rework through better design insights, accurate simulations, and stronger project outcomes.

· Forestry – Key use case: biomass estimation. Benefit: smarter resource management that supports sustainability, harvest planning, and long-term ecosystem monitoring.

· Urban Planning – Key use case: city modeling. Benefit: efficient infrastructure planning with better visualization, growth forecasting, and optimized land use.

Conclusion

High-quality 3D point cloud annotation is the backbone of reliable AI. From bounding boxes to semantic segmentation, the right mix of tools, processes, and 3D LiDAR annotation services defines whether AI systems perform safely and accurately in the real world.

As industries from automotive to environmental science embrace LiDAR, the demand for expert annotation will only grow. The future of AI depends on it, and success lies in balancing automation with human expertise to achieve scalable, trustworthy data labeling.